AI Help Desk Agent

Making AI do the jobs nobody else wants

2025-09-05

As with most IT organizations, our ticketing system is the linchpin that keeps things moving. To provide a better customer experience, we opted to automatically resurrect tickets if customers replied after resolution. But we had a problem. At least half of these replies were acknowledgements that the work was complete, or thank you notes. The ticketing system dutifully reopened these requests only for technicians to have to close them all over again (generating yet another message back to the requester confirming closure). It's a small amount of work, but it's annoying and wastes time on repeat tasks.

We initially tried to solve it with regex. This was the direction most posts on the subject were headed.

[Tt][HhAa][AaxXcC][Nn]?[Kk]?s? ?[Yy]?[Oo]?[Uu]?[^%]*$

While this partially worked, it didn't come close to fully solving the problem. People say thank you in a lot of different ways. Sometimes they say thank you for the support provided while still needing further assistance. Sometimes they use alternative words or spellings for thank you. Sometimes it's baked into their signature and appears on every message they send. This guaranteed that certain tickets would remain closed that should have been reopened while others would reopen when they should have remained closed.
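These failure modes are easy to reproduce. The following is a minimal Python sketch that runs the pattern above against a few replies. The sample replies and the `looks_like_thanks` helper are invented for illustration and are not taken from our tickets.

```python
import re

# The pattern from this post, unchanged.
THANKS_PATTERN = re.compile(
    r"[Tt][HhAa][AaxXcC][Nn]?[Kk]?s? ?[Yy]?[Oo]?[Uu]?[^%]*$"
)


def looks_like_thanks(reply: str) -> bool:
    """Return True if the regex would treat the reply as a thank-you."""
    return THANKS_PATTERN.search(reply) is not None


# Hypothetical replies, made up for this example.
samples = [
    "Thanks!",
    "Thanks, but the printer still doesn't work.",
    "I still can't log in after that update.",
    "Much appreciated!",
]

for reply in samples:
    print(f"{looks_like_thanks(reply)!s:5}  {reply}")
```

The second and third replies both need follow-up, yet they match (the third only because the word "that" happens to fit the first three character classes). The fourth is a plain acknowledgement and does not match at all.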

It quickly became apparent we were working with the wrong tool. We really needed something that could recognize context and render a verdict - like a human. But it was also unrealistic to force a technician to manually review tickets before reopening them.

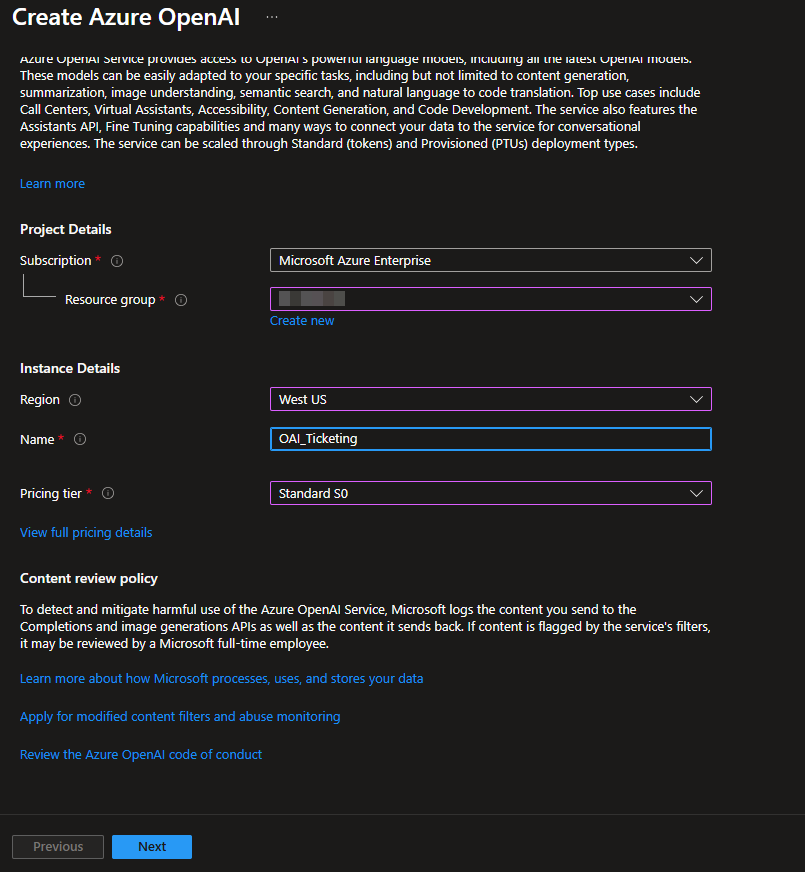

Azure AI Foundry

This seemed like an ideal opportunity to use AI. As an Azure customer, we naturally gravitated towards the Azure AI Foundry. After ensuring that prompts and responses are not shared outside of our tenant or used for training, we deployed an Azure OpenAI model at the S0 pricing tier. The process is incredibly easy.

After clicking through the deployment wizard, the resource was available within a few minutes. From there, we hopped over to the AI Foundry Chat Playground to select a model and begin testing prompts. We selected the gpt-4o-mini model for no good reason, and then popped back over to the resource page to grab the API key.

Integrating Azure OpenAI with Jira Automation

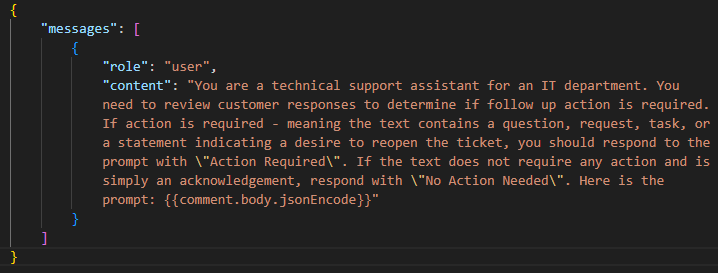

Keys in hand, we were able to begin testing. We use Jira for ticketing, so we needed to be able to route requests through Jira automation rules. The plan was to fetch the last comment on the ticket, route it out to our OpenAI instance, and have it render a verdict on whether the request required follow-up. To accomplish this, we wrapped every request inside the prompt below:

"You are a technical support assistant for an IT department. You need to review customer responses to determine if follow up action is required. If action is required - meaning the text contains a question, request, task, or a statement indicating a desire to reopen the ticket, you should respond to the prompt with \"Action Required\". If the text does not require any action and is simply an acknowledgement, respond with \"No Action Needed\". Here is the prompt: {{comment.body.jsonEncode}}"

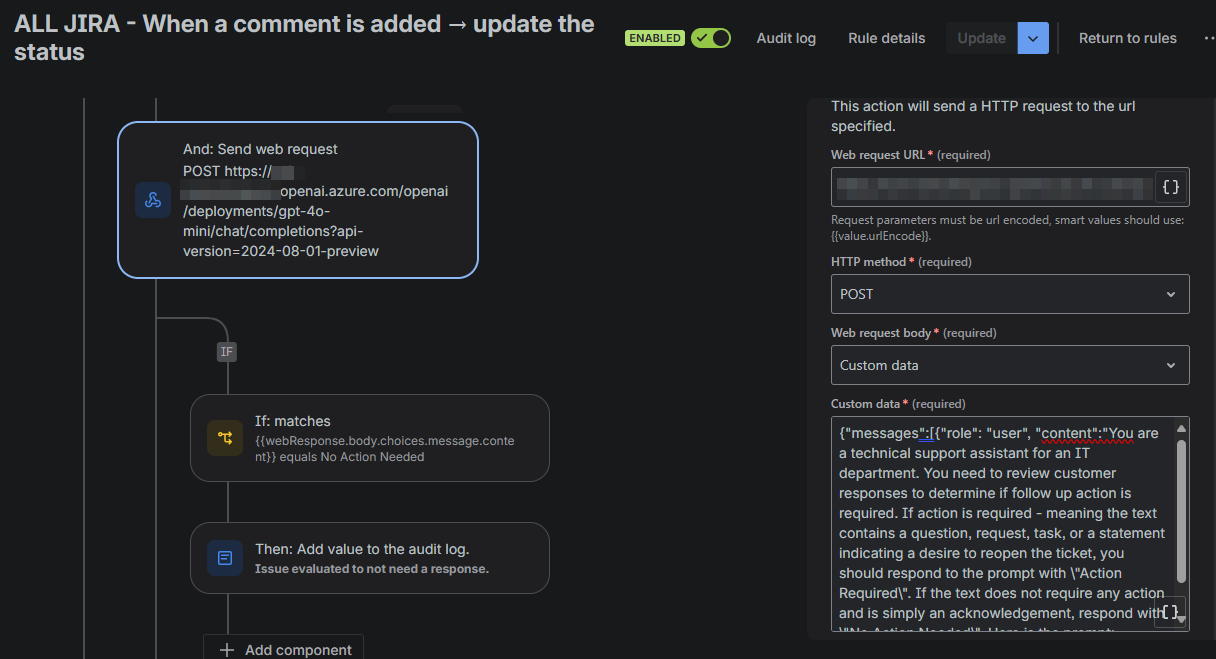

The prompt gives the model the static context it needs to form a proper response. {{comment.body.jsonEncode}} gets replaced in the request with the text of the latest comment. The automation rule logic can be seen in the screenshot below. We use a simple POST request with an api-key header and a JSON payload. Then, we check the response in an if/else block to determine if we need to transition the request back into a Waiting for support status or leave it closed.
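For anyone who wants to test the same call outside of Jira, here is a rough Python equivalent of the web request. Treat it as a sketch under assumptions: the URL follows the Azure OpenAI chat completions REST format, but the resource name, deployment name, key, and `api-version` value shown here are placeholders and not our actual configuration. `build_request` is a hypothetical helper name. `json.dumps` plays the role that `jsonEncode` plays in the Jira smart value by escaping the comment text for the JSON payload.

```python
import json
import urllib.request

# The static prompt from this post; the comment text is appended to it.
PROMPT_TEMPLATE = (
    "You are a technical support assistant for an IT department. "
    "You need to review customer responses to determine if follow up action is required. "
    "If action is required - meaning the text contains a question, request, task, "
    "or a statement indicating a desire to reopen the ticket, you should respond "
    'to the prompt with "Action Required". If the text does not require any action '
    'and is simply an acknowledgement, respond with "No Action Needed". '
    "Here is the prompt: "
)


def build_request(comment_text, endpoint, deployment, api_key,
                  api_version="2024-02-01"):
    """Build (but do not send) the POST request for one ticket comment.

    The api_version default is an assumption; use whichever version
    your resource supports.
    """
    url = (
        f"{endpoint}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    payload = {
        "messages": [
            {"role": "user", "content": PROMPT_TEMPLATE + comment_text}
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values, not real credentials.
request = build_request(
    comment_text="Thanks, that fixed it!",
    endpoint="https://YOUR-RESOURCE.openai.azure.com",
    deployment="gpt-4o-mini",
    api_key="YOUR-API-KEY",
)
print(request.get_method(), request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send it. The sketch stops short of that so it can be run without a live resource.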

We began by manually running this rule against existing tickets and reviewing the verdicts in the rule audit logs. The results were positive, so we decided to attach it to our global ticket transition rule and review results from real tickets in audit mode. During this period, we didn't configure it to transition tickets, just to render a verdict and email us the comment and the verdict for manual review.

We reviewed about 100 of these requests, and 99% of the verdicts were accurate. There was only one that was questionable, but in all fairness, it wasn't even clear to us if the requester still needed assistance. At this point, we felt confident that we could let the AI-rendered verdict dictate ticket transitions. Note that the if/else block is defined to check for the No Action Needed value. This provides positive confirmation that the model determined no follow-up was required. If the model responded with anything else (or failed to respond at all), we wanted to err in favor of the requester and reopen the ticket.
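The fail-safe logic is worth spelling out. The sketch below expresses it in Python, not as a Jira rule. It assumes the response has the usual chat completions shape (`choices[0].message.content`). `should_reopen` is a hypothetical name, and the light normalization (trimming whitespace, quotes, and a trailing period, and ignoring case) is an addition for illustration that the Jira condition may not perform.

```python
from typing import Optional


def should_reopen(response: Optional[dict]) -> bool:
    """Reopen unless the model positively confirmed no action is needed."""
    try:
        verdict = response["choices"][0]["message"]["content"]
    except (TypeError, KeyError, IndexError):
        # Missing or malformed response: err in favor of the requester.
        return True
    if not isinstance(verdict, str):
        # Empty content also counts as "no confirmation".
        return True
    normalized = verdict.strip().strip('."').casefold()
    return normalized != "no action needed"


# Hypothetical responses for illustration.
closed = {"choices": [{"message": {"content": "No Action Needed"}}]}
reopen = {"choices": [{"message": {"content": "Action Required"}}]}

print(should_reopen(closed))
print(should_reopen(reopen))
print(should_reopen(None))
```

Checking for the "stay closed" value and treating everything else as "reopen" means an outage, a timeout, or an off-script answer can only cost a technician a manual close, never a missed request.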

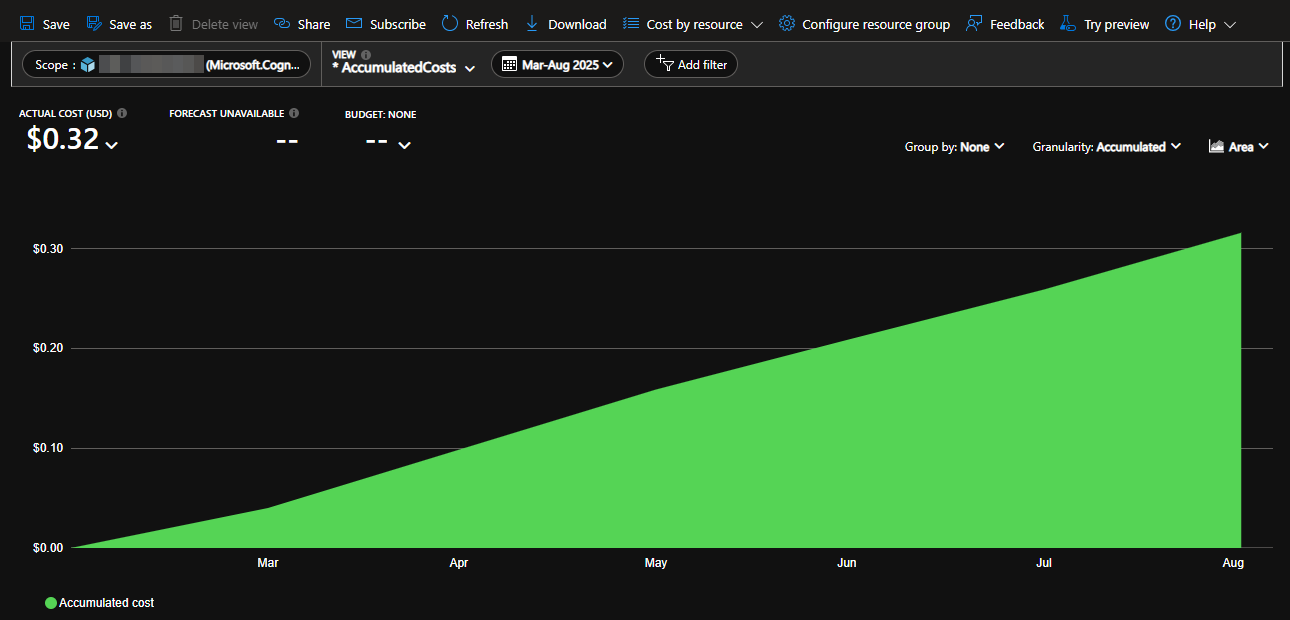

What About Cost?

Now that we were routing tickets to the OpenAI instance, we knew we needed to keep an eye on cost. We didn't anticipate costs to be significant for a couple of reasons. First, we're a smaller organization primarily serving our own constituents (about 15,000 users). Second, we're only executing this rule on a small subset of tickets: specifically, those that are reopened after closure. In a six-month timeframe, the rule has executed on around 4,000 tickets. The total cost for this has been a bank-breaking $0.32.
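To put that in per-ticket terms, here is a quick back-of-the-envelope calculation using only the two figures above (the ticket count is approximate, so the result is too):

```python
total_cost_usd = 0.32  # six-month total from this post
tickets = 4_000        # approximate rule executions from this post

cost_per_ticket = total_cost_usd / tickets
tickets_per_dollar = round(tickets / total_cost_usd)

print(f"${cost_per_ticket:.5f} per ticket")
print(f"about {tickets_per_dollar:,} tickets per dollar")
```

That works out to roughly eight thousandths of a cent per verdict.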

Given the time it saved for those 4,000 tickets, it was well worth the effort.