Exchange Tar Pit

Troubleshooting Exchange Server Throttling

2025-08-29

In the never-ending process of cleaning up technical debt, we focused on retiring an internal SMTP relay server. The server had been blocked from routing mail to the Internet long ago. Instead, it passed messages along to the next hop: an internal Exchange server. The Exchange server already provided anonymous relay to trusted internal networks, so the legacy server existed only to prevent disruption for services that happened to still be using it.

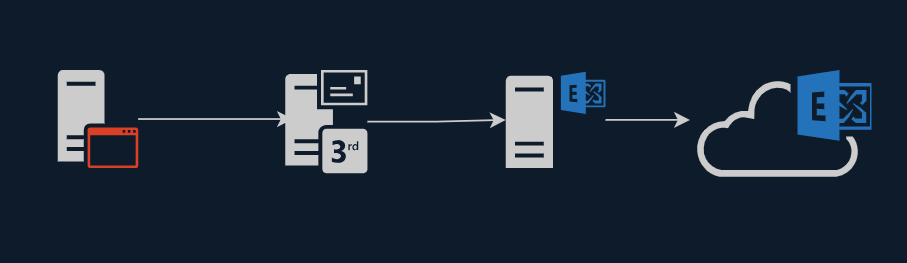

The diagram below represents our original relay flow. It's pretty straightforward: the extra mail server is the second from the left.

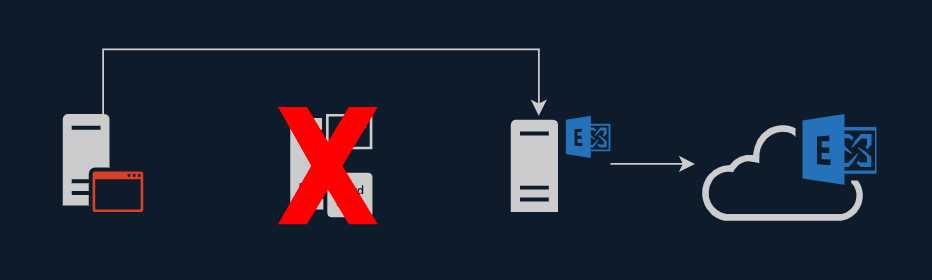

To bypass the legacy server, we pointed its DNS records directly to the Exchange server. It was only being used for anonymous access over port 25, so we didn't need to worry about SSL. For the most part, the change was quietly tolerated, and no issues were reported immediately. The new architecture bypassing the legacy server is represented below.

Within a couple of weeks, we received a ticket indicating that a large batch process in our ERP system had taken several hours longer than usual, and that it appeared to be delayed while sending email. The timing coincided more closely with a tools upgrade on the application server, so that was the first place we looked. A warning message related to write operation failures seemed to be the smoking gun that the tools upgrade was the culprit. However, correcting the issue shaved seconds off the total processing time, not hours.

Throttling

The relay was the next logical place to look, particularly the throttling settings in Exchange. If the application server opened a new connection to the relay for every message, it could be quickly exhausting its available connections. In the previous architecture, Postfix may have buffered this channel by accepting the entire batch of messages and feeding them through the Exchange relay.

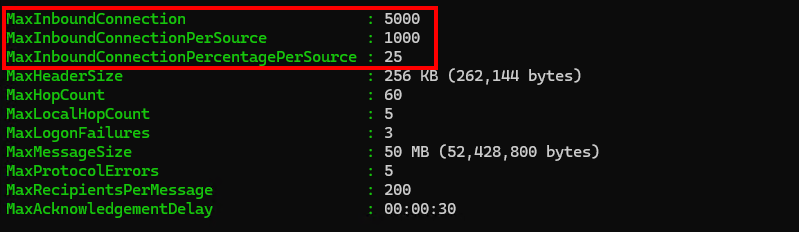

The first settings we checked were the inbound connection limits on the relay receive connector using the command below.

Get-ReceiveConnector "EXSERVER1\Relay" | fl *Max*

The output of the command will look something like below:

Note that the settings above are modified. Explanations for these settings and their default values are included below.

- MaxInboundConnection (Default: 5000). This setting limits the total number of established connections from inbound servers on this relay. In our case, the default of 5000 was more than enough.

- MaxInboundConnectionPerSource (Default: 100). This indicates the maximum number of connections coming from a single source IP at a given time. In our case, a single application server potentially needed to establish hundreds of connections at a time. Since the server is not exposed to the Internet, and the number of internal services accessing the relay is small, allowing a single source a large share of the total available connections is low-impact.

- MaxInboundConnectionPercentagePerSource (Default: 2). This setting indicates the maximum number of connections permitted from a single source on the receive connector "expressed as the percentage of available remaining connections on a Receive connector" (1). Note that this setting is applied in conjunction with, not instead of, the previous setting.

At first glance, it might seem like the per-source settings are redundant. However, let's assume a relay is configured with a maximum cap of 1000 connections, a per-source cap of 500 connections, and a per-source percentage cap of 60 percent. Assuming there are no connections to the relay at the time a given source connects, the percentage cap would allow 60 percent of 1000, or 600 connections, so the lower MaxInboundConnectionPerSource limit of 500 is the one that applies.

Now assume the relay is busy managing 800 connections when a new source establishes a connection. There are 200 available connections before the cap is reached. The per-source percentage cap limits the new source to 60 percent of the 200 available connections, for a maximum of 120 connections. This is well under the 500 per-source limit. The purpose of this setting is to prevent a single consumer from exhausting available connections during busy periods.
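The two scenarios above can be sketched as a small calculation. Get-EffectivePerSourceLimit is a hypothetical helper written for this post, not an Exchange cmdlet, and it models only the simplified arithmetic described here (the lower of the per-source cap and the percentage of remaining connections), not Exchange's internal logic.

```powershell
# Hypothetical helper: models the simplified arithmetic from the text,
# not Exchange's actual implementation.
function Get-EffectivePerSourceLimit {
    param(
        [int]$MaxInboundConnection,
        [int]$MaxInboundConnectionPerSource,
        [int]$MaxInboundConnectionPercentagePerSource,
        [int]$CurrentConnections
    )

    # Connections still available before the connector-wide cap is reached.
    $remaining = [Math]::Max(0, $MaxInboundConnection - $CurrentConnections)

    # Share of the remaining connections a single source may take.
    $percentageCap = [Math]::Floor([double]$remaining * $MaxInboundConnectionPercentagePerSource / 100)

    # The lower of the two per-source limits wins.
    [int][Math]::Min([double]$MaxInboundConnectionPerSource, $percentageCap)
}

# Idle relay: the fixed per-source cap applies.
$idle = Get-EffectivePerSourceLimit -MaxInboundConnection 1000 -MaxInboundConnectionPerSource 500 -MaxInboundConnectionPercentagePerSource 60 -CurrentConnections 0

# Busy relay with 800 connections in use: the percentage cap applies.
$busy = Get-EffectivePerSourceLimit -MaxInboundConnection 1000 -MaxInboundConnectionPerSource 500 -MaxInboundConnectionPercentagePerSource 60 -CurrentConnections 800
```

With the example values, `$idle` comes out to 500 and `$busy` to 120, matching the figures in the two paragraphs above.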

For our purposes, we don't expect the total connections to get anywhere near the 5000 maximum, so it's unlikely we'll have a case where the percentage cap kicks in. Setting MaxInboundConnectionPerSource to 1000 provides plenty of room for regular large batches from our application server.
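For reference, a change like this can be applied and checked along the following lines. This is a minimal sketch that reuses the connector identity from the earlier command; adjust the name and value for your own environment.

```powershell
# Raise the per-source connection cap on the relay receive connector.
Set-ReceiveConnector "EXSERVER1\Relay" -MaxInboundConnectionPerSource 1000

# Confirm the new value alongside the other connection limits.
Get-ReceiveConnector "EXSERVER1\Relay" | Format-List Name, MaxInbound*
```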

With these settings applied, we tested a small batch...

...and failed.

Throttling Revisited

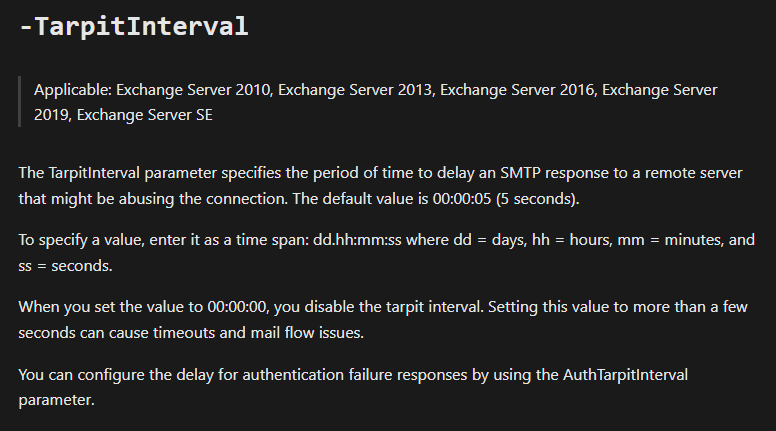

The application logs showed that every message still took over 5 seconds to send. I stumbled across the parameter below when revisiting the documentation for additional throttling settings.

Bingo. There's an artificial 5-second delay added to inbound connections. The phrase "that might be abusing the connection" is misleading. Exchange isn't evaluating the server's reputation before subjecting it to the tarpit. On anonymous relays, every incoming connection is subjected to the tarpit. And since our application server makes an individual connection for every message, the process was artificially delayed by five seconds, thousands of times over.
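To put a rough number on that, the sketch below multiplies the tarpit delay by a batch size. The 3,000-message figure is a hypothetical stand-in, not the real size of our batch, and the calculation assumes one connection per message with the full delay applied to each and no parallel sends.

```powershell
# Hypothetical batch size; substitute the real message count for your job.
$messageCount = 3000

# Per-message delay seen in the application logs (the tarpit interval).
$tarpitSeconds = 5

# Total time the batch spends waiting on the tarpit.
$totalDelay = New-TimeSpan -Seconds ($messageCount * $tarpitSeconds)
"{0:N1} hours of added delay" -f $totalDelay.TotalHours
```

Under those assumptions, the tarpit alone accounts for a little over four hours, which is the same order of magnitude as the slowdown reported in the ticket.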

If you trust all your incoming connections on the relay, there's a simple fix:

Set-ReceiveConnector "EXSERVER1\Relay" -TarpitInterval "00:00:00"

This disables the tarpit delay and allows mail to flow without the artificial pause. We tested again after applying this change, and the job completed in the expected timeframe.
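One way to double-check a change like this is to read the setting back and time a single submission from a trusted host. The sketch below is illustrative only: the SMTP host name and addresses are placeholders rather than values from our environment, and Send-MailMessage is flagged as obsolete in newer PowerShell versions, though it is still handy for a quick test.

```powershell
# Confirm the connector now reports a zero tarpit interval.
Get-ReceiveConnector "EXSERVER1\Relay" | Format-List Name, TarpitInterval

# Time a single anonymous send through the relay.
# The host name and addresses are placeholders; replace them with your own.
$elapsed = Measure-Command {
    Send-MailMessage -SmtpServer "relay.example.internal" -Port 25 `
        -From "batch@example.internal" -To "test@example.internal" `
        -Subject "Tarpit test" -Body "Timing a single relay submission."
}
"{0:N2} seconds" -f $elapsed.TotalSeconds
```

Running the timing portion before and after the change gives a simple per-message comparison without waiting for the full batch job.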

If you don't trust all your incoming connections but you need to permit specific IPs to connect unhindered by the tarpit, create a new relay receive connector, scope it to your trusted IPs, and set the tarpit interval on the new connector to 00:00:00.
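A scoped connector of that kind might look something like the sketch below. Treat it as a starting point under several assumptions: the connector name and IP addresses are made up for illustration, the FrontendTransport role applies to Exchange 2013 and later, and the new connector still needs whatever relay permissions your existing connector has.

```powershell
# Create a dedicated connector that only matches the trusted senders.
# "Trusted Relay" and the IP addresses are hypothetical examples.
New-ReceiveConnector -Name "Trusted Relay" -Server "EXSERVER1" `
    -TransportRole FrontendTransport -Custom `
    -Bindings "0.0.0.0:25" `
    -RemoteIPRanges "10.0.0.10", "10.0.0.11" `
    -PermissionGroups AnonymousUsers

# Disable the tarpit on the new connector only; the original relay keeps its delay.
Set-ReceiveConnector "EXSERVER1\Trusted Relay" -TarpitInterval "00:00:00"

# Review the result.
Get-ReceiveConnector "EXSERVER1\Trusted Relay" | Format-List Name, RemoteIPRanges, TarpitInterval
```

When several connectors share the same binding, Exchange selects the one whose remote IP ranges most specifically match the connecting host, so the listed servers should land on the new connector while everything else continues to hit the original relay.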